Over the weekend of 31 October/1 November we had some visitors, Emma and James, friends we have known for years, but never met in person. Yay Internet. On the Saturday we cycled to Zaanse Schans and did windmills. Sunday was misty, and we went for a walk in het Twiske.

Mixed Media, Sunday 27 December 2015

Long time since the last Mixed Media post, so let me start with the easy ones:

The two albums that have been in near constant rotation over the last month or so are Art Angels by Grimes and Geronimo! by Piney Gir. Grimes is the kind of artist I feel I should have been into, but wasn’t until I heard her track “SCREAM” on Zane Lowe’s show on Beats 1 one day. It’s an unusual piece, featuring Taiwanese rapper Aristophanes, but it was my gateway to the rest of the album, which is all kinds of brilliantly manic. “Flesh Without Blood” is on the short list for my favourite song of 2015.

Piney Gir I first saw supporting Gaz Coombes at Tolhuistuin last month. Geronimo! (2011) is a simply delightful album that’s easy and relaxing to listen to. I’ve also dived into Peekahokahoo, and I’ve been enjoying that too. (“La La La” made me do an aural double-take to make sure I hadn’t accidentally tuned in to a new song by The Go! Team.) And a couple of days ago I finally tracked down her new album, mR hYDE’S wILD rIDE on eMusic. (Yes, eMusic still exists.)

My trend of reading mostly comic books instead of novels continues. I got volume 0 of Howard The Duck’s new run by Chip Zdarsky and Joe Quinones for Christmas. It’s absurd and bonkers, and it still manages to capture moments of insight into the alienation that Howard feels, being trapped in this world. It features cameos from lots of Marvel superheroes, each one a caricature for maximum comic effect. The writing is great, the art is spot-on. It fits right in with the new wave of Marvel comics like Ms. Marvel and Squirrel Girl that wrap up-to-date and very relatable themes in quirky and adorable outer shells to break new ground for an audience hungry for superhero stories beyond the conventional.

Speaking of which, G. Willow Wilson’s run of Ms. Marvel is excellent, as is Ryan North’s and Erica Henderson’s lower profile Unbeatable Squirrel Girl. Hawkeye: Rio Bravo finishes Matt Fraction’s run on the comic very nicely, and Saga vol V continues to be beautiful, tender, and shocking.

Unable to wait for the next trade paperback of Sex Criminals, I found myself buying individual issues of the comic. It’s a slippery slope. Pretty soon I found myself drawn to the striking covers of Paper Girls by Brian K. Vaughan (Saga et al.) and Cliff Chiang, and I’ve been reading them as they’ve hit the stands, too. They’re as good as the covers suggest.

In the world of traditional novels, The Long Way To A Small Angry Planet by Becky Chambers is one of the best SF books I’ve read in the last few years. It reminded me of Firefly and also Bob Shaw’s Ship Of Strangers in a way. You’ve got a quirky ship with a small crew of humans and aliens, all of whom are oddballs and outcasts in their own way, working their way across the galaxy for the hope of reward. But really just because that’s where they want to be, instead of plugging away at a desk job dirtside.

Another Christmas present for me this year was Thomas Levenson’s The Hunt for Vulcan, which isn’t a Star Trek tie-in, but rather a scientific history of the search for the missing planet Vulcan, which Newton’s theory of gravity suggested should be orbiting the sun even closer than Mercury. Einstein’s theory of relativity explained that Vulcan wasn’t there after all, but there was a period in the 19th century where the entire scientific establishment believed the planet should be there, and went on a periodic frenzy to prove by observation that it was there. It’s a fascinating tale. (I wish I could remember who told me about the book, and made me place it on my wish list. Good call, whoever.)

In games, I’ve finished playing through Diablo III (on the Xbox One) as Bob the Barbarian. It was a fairly mindless slug-fest. I spent so much time doing all the side-quests that Bob ended up so powerful I pretty much steamrollered every boss battle I encountered. On the other hand, that was exactly what I needed during November and December: a powerful distraction.

Podcasts: I’ve added Eric Molinsky’s Imaginary Worlds to my regular listening after hearing his episode “Fixing The Hobo Suit” on 99% Invisible. I’ve gone back and listened to all of the episodes so far, and they’re well worth the time. I’ve also been tuning in to Hello Internet with Brady Haran and CGP Grey, and Cortex with CGP Grey and Myke Hurley. Limetown’s first season has just ended. I didn’t enjoy the later episodes as much as the first ones. It started feeling over-produced, and more like a radio play than a mock-podcast. It’s a fine line they were trying to walk. I’ll probably come back for season 2 if they make it.

Finally, films. So many that I’m just going with the list format again:

- Contagion Smart, fast-moving, very realistic disaster film. This is what a global outbreak could look like. Probably not something you want to watch on an airplane sitting next to someone with a sniffle.

- Criminal Dense, low-key con/grifter caper. Don’t be expecting the comedy of Oceans 11. The characters are only barely likeable, and that only some of the time.

- Spectre Third-best Daniel Craig Bond film, after Skyfall and Casino Royale. Good action scenes, but the interpersonal relationships lack depth, and Christoph Waltz is sorely wasted.

- Circle Intense, low-budget, thought-provoking exploration of human nature in winner-takes-all life-or-death circumstances.

- Django Unchained Good, but not essential.

- Sunshine on Leith Funny, tender, uplifting, brilliant.

- Mockingjay part II Wow, that got really dark. I mean, I know the Hunger Games series was dark anyway, but whoa.

- Lockout Guy Pearce is the only good thing in this film, and he is so much better than everyone and everything else in it that, frankly, it’s embarrassing.

- The Man From U.N.C.L.E. I’m not generally a Guy Ritchie fan, so this was surprisingly good fun.

- Gravity The special effects are so good that I forgot they were there, and stopped looking for the seams. Intense.

- I Am Legend Somehow I had got the impression that this was a pretty bad film, and had skipped it until now. Actually pretty good.

- Project Almanac Confusing and messy teen time-travel film; more Primer than Back To The Future. It doesn’t pull it off, but I enjoyed the attempt.

- Out Of The Furnace Beautifully shot, but grim and harrowing slice of small-town decay, violence, duty, and revenge.

- Her Lovely. Sweet performance by Joaquin Phoenix. Interesting counter-point to Ex Machina.

- Star Wars (IV, V, VI), the de-specialized editions Some of the special effects Lucas added for the remastered/updated editions improved the originals. But for me, some of the charm of watching the originals lies in knowing how the puppeteers and model-makers brought the old special effects to life in a way that still mostly looks good even 40 years later.

- Star Wars: The Force Awakens Loved it. I keep coming back to how different the character of Poe Dameron was to the disturbed genius Oscar Isaac played in Ex Machina. Makes me want to seek out more of what he’s done. The rest of the film was good, too. The last scene was weird, though – it felt like that should have been the opening of Episode VIII.

Casual Triathlon 2015

On Saturday 7 November Abi and I did another Casual Triathlon. The weather forecast for the day was relatively warm, and we thought that we should take the opportunity on what might be the last good weekend of the autumn. True, it wasn’t cold, but perhaps next time we’ll pay a bit more attention to the wind and precipitation forecasts as well.

Rather than head North-West to the Zaangolf for our swim, this time we cycled South through Amsterdam to the Sloterparkbad. On the way, we passed the construction site at the Oude Houthaven, where they’ve already dug a hole in the river IJ. It’s very Minecraft.

We had got a fairly late start, there was a strong wind, and it was raining. It was after 11 by the time we got to the pool (pre-moistened), and we only just had time for our 1.5km swim before it closed for lessons and clubs. Then we started our 40km cycle. We took a route East through the centre of Amsterdam, past Centraal Station, along the Piet Heinkade, and then onto Zeeburgereiland. We were taking our time, and stopped along the way to take pictures of nice views, buildings, and typography.

Across the IJ, we took advantage of the northeasterly wind at our backs and followed the road around the coast of the IJsselmeer through Durgerdam and Uitdam. Great views. We didn’t go as far as Marken — we only needed to do 40km. We turned West where the Zeedijk splits, and turned onto Gouw towards Zuiderwoude a couple of kilometers later, right into the teeth of the wind.

We took a break at Zuiderwoude at about 15:30.

When we do these walks and cycle trips, we both have our limiting factors. Abi’s hip starts to hurt on long walks; my knee starts to hurt on long cycle rides. After Zuiderwoude, our route was still straight into the wind. It was a tough slog all the way to Landsmeer where we hit 40km. Our plan had been to cycle on to het Twiske, park our bikes near the boerderij, and do a 10km loop like we did last year. My knee was bothering me quite a bit by that point, though, so we improvised. We got off our bikes, and started our walk in Landsmeer already. We walked the bikes into het Twiske, and parked them near the windmill where Het Luijendijkje forks. That gave us about 2km.

It was after 17:00, and starting to get dark. We figured that we could do a 6-7km loop around the lake, past the sports centre, and then back along the path next to the Ringvaart. Although we had got wet on the ride to the pool in the morning, the rain had stopped by noon, and the wind had blow-dried us very effectively. But part-way through our walk, the clouds hit us with everything they’d been holding back during the day. We weren’t wearing rain gear, and we got soaked to the skin.

Because we’d left our bikes beyond the cut-off back to Oostzaan, we didn’t have much choice but to trudge wearily onwards in the dark and stormy night. The loop was shorter than we’d thought, and we still had almost 2km left when we reached them. So rather than cycling home quickly, we carried on walking, pushing our bikes and looking rather pathetic to all the people who passed us on the way. We hit our 10km milestone just a couple of hundred meters away from home, and we jumped onto our bikes to kill that final distance as quickly as possible.

Cold, wet, tired, but we did it again.

MartinFest 2015

A few weeks ago I indulged in a week of concerts every evening. They weren’t part of an organised series; it was my own private festival.

It started on Monday 2nd November with Battles in the Oude Zaal at Melkweg. I got there late, and wasn’t able to find a place to stand with good visibility of the stage. I moved a couple of times, but apparently Battles attracts a really tall crowd. I never ended up in a spot where I could see all three band members at the same time.

This was the first time I’d used my new Lumia 930 phone for taking photos at a gig. I took a couple of test shots of the stage before the band came on, and immediately thought that something was wrong. The pictures came out black, with a few blue and purple patches. Very artistic, but utterly useless as an accurate representation of what was right in front of me.

I had been enjoying the Lumia so much until then, that my heart sank at the prospect of not being able to use it for snapping concert pics. I tried tweaking all the settings I could find, but nothing helped. I was watching people around me take pictures of the stage on their Android and Apple phones, and they were coming out fine. The sample photos I took of the sound desk were okay, and the low-light performance of my phone seemed reasonable so long as I wasn’t facing the stage directly. I think it was the lighting: before the band came on, the stage lights were tuned to a uniform harsh blue, and were pointed partly at the audience. The processing algorithms in the Lumia just can’t cope when the lens is being dazzled.

The gig — what I saw of it — was fine. I made sure I always had a good view of John Stanier on drums, at least, and he was a sight to behold. Amazing rhythm.

On Tuesday 3rd it was Dutch Uncles in the Kleine Zaal at Paradiso. I showed up just before 21:30, thinking that I would be lucky to still get a spot with a view. But apart from me, the DJ, and the bar staff, there were…8 other people in the room. I got myself a drink and perched near a wall, feeling slightly weird about standing in the middle of the room all on my own. Did I get the time wrong? I’ve been to some little-attended gigs at Paradiso, but this was a new record for me. I felt really bad about it. I love Dutch Uncles. They’re the band I was going to see twice that week: once headlining their own set at Paradiso, and once in support of Garbage in Tilburg. I wanted them to be received by a typically enthusiastic Paradiso crowd.

Over the next half hour more people drifted in, but not more than another twenty or so. A few members of the band came out from backstage and greeted some friends of theirs in the audience, which made me feel even worse. But they were undaunted. At 22:00 they mounted the stage, and put on a simply amazing gig. As the concert in the Grote Zaal finished, more people made their way upstairs. By the end of the concert I reckon there were some 60-70 people there. Not a huge crowd, but they didn’t care: they put on a show, owning the stage with their energy, and playing a 14-song set with an encore. That’s pro.

- Babymaking

- Fester

- Face In

- Decided Knowledge

- Cadenza

- Threads

- I Should Have Read

- A Chameleon

- Jetson

- Nometo

- Upsilon

- The Same Plane Dream

- Flexxin

- Dressage

Encore:

- Steadycam

And I was front and centre the whole time, cheering and grinning and applauding and loving every minute of it. It reminded me of seeing Dananananaykroyd at that same venue back in 2011. They were going to play their hearts out no matter what.

Three things I discovered at that gig. First is that singer Duncan Wallis really does dance like that. I had thought that the video for Flexxin was specially choreographed, but no:

Second: dual xylophones! Listening to their music after the gig, it’s perfectly obvious: there are xylophones all over the place. Before, I hadn’t given it any thought. The characteristic wooden sound of the instrument had just been an integral part of the band’s sound. But not only is it part of their sound, the xylophone is also out at the front of the stage. Duncan Wallis and Pete Broadhead play it together, from opposite sides. It’s a fun alternative to guitar solos.

Third: I had totally overlooked “Dressage” as a track on their album Cadenza. It’s fantastic live.

After the gig was over, I stayed behind near the merch stand for a few minutes so I could tell the band how much I enjoyed the show. I bought a T-shirt too, of course.

So Wednesday 4th November was supposed to have been Garbage at 013 in Tilburg, but that didn’t quite work out. At short notice, the Oostzaan town council had organized a public consultation for that evening regarding their decision to put up a group of 100 refugees for a 72 hour period. Taking in refugees is a controversial subject in these parts. Trouble had broken out at a similar meeting in nearby Purmerend a few weeks previously. Abi and I are both very much in favour of our gemeente taking in refugees. We expected that the meeting here in Oostzaan would be quite well attended, and we wanted to show our support for the council’s decision, even if it was just in the form of our presence.

I was very much in two minds throughout the day, though. I had been looking forward to this week of concerts for a long time, and there was no way that I could attend the meeting and still make it to the gig. Another argument against going to the gig was that late nights on the previous two evenings had left me quite tired, and it was going to be at least a 90-minute drive to Tilburg each way. Late in the afternoon I called up Abi and talked it through with her. There wasn’t going to be a public vote, so would it really matter if I wasn’t actually present at the meeting? At the end of the call I had made up my mind: I would go to the concert.

As soon as I hung up the phone, I regretted the decision. That told me it was the wrong one. So I changed my mind and didn’t go after all. Instead, we went to the meeting at the Grote Kerk, which was so oversubscribed that they had to split it into two sessions. We were at the first one. The mayor and representatives of the council explained their plans, then opened the floor to people who had previously registered to speak. Some spoke very eloquently in favour; some spoke against. (I’m sure you can imagine the rhetoric.)

And then there were a couple of randos who took the opportunity to berate the town council for not just making a decision and getting on with it. Seriously. Two of them.

I bit my tongue rather than booing the opponents, because that didn’t seem to be the way. The meeting was well-run and polite, even when a man who clearly wasn’t a local took over the speaking slot of a woman who hadn’t turned up, and used it to spout the familiar xenophobic PVV demagoguery. His bile was applauded, not as weakly as I had hoped, yet not as strongly as I had feared. I’d guess that the mood was about 60:40 in favour of the plans. There was a sentiment, expressed by a couple of speakers, that taking in a mere 100 refugees for just 72 hours was a half-hearted effort, and that we could and should do better.

After the meeting was over, there was coffee (of course) in the community hall next to the church, and an opportunity to speak with representatives of the council and volunteer organizations. We spoke to a coordinator for the refugee crisis centre in Zaandam, and we put our names down as volunteers for the Oostzaan shelter.

The next day I was at the Django Under The Hood conference during the day, my first tech conference in a long time. (I’m trying to think what my last one was…maybe dConstruct in 2013? Insofar as dConstruct is actually a “tech” conference…) And then the Foo Fighters at the Ziggo Dome in the evening!

Support was Trombone Shorty and Orleans Avenue, familiar if you’ve seen the Sonic Highways series. They were very good.

I love the Foo Fighters. This is the concert that I had planned to see in Edinburgh in June, but which was cancelled because of Dave Grohl’s injury. The ticket for Edinburgh was not cheap; the ticket for this one was even more expensive. (After-market, what are you gonna do.) I think their album Sonic Highways is one of their best ever, and the accompanying documentary is an amazing piece of work. My expectations of the Ziggo Dome had been set quite high by Taylor Swift’s concert earlier in the year. And my expectations of concerts for that week had been set high by Dutch Uncles on Tuesday.

So when I say I felt it was “just a gig,” that doesn’t mean that it was a bad concert; just that I might have been in the wrong frame of mind.

I like being close to the band on stage, and stadium gigs only deliver that experience to a select few. I like watching drummers play, but the video system at the Ziggo Dome suffers from huge and distracting lag. It was almost impossible to watch Taylor Hawkins any time he appeared on the screens. I like a loud concert, but the sound felt overwhelming and distorted. They only played two songs from Sonic Highways (“Something from Nothing” and “Congregation”). The rest was a collection of their greatest hits — of which there are many!

Dave Grohl clearly loves playing to audiences in the Netherlands, and the fans here love him right back. The fact that he played most of the show from his custom-built rock throne, with his leg still in a cast, only added to his legend as a great performer. At one point he asked people how often they had been to a Foo Fighters gig. Going by the cheers, most people had been to several, if not many. For us first-timers, he jokingly called out “where have you been all this time?” I’m sure he didn’t mean that in a bad way, but it made me feel out of place again.

I’m not sure what conclusion I can draw here. Am I happy I went? Yes. Do I feel the ticket price was “worth it”? Sure. Is that only because I can now tick off the Foo Fighters from my score card? Maybe. Would I go and see them at a big stadium again? Hmm. What are the chances of me ever getting to see them at a Paradiso-sized venue? LOL.

Friday 6th November was Gaz Coombes at Tolhuistuin (“Paradiso Noord”). I’d been there once before to see Blonde Redhead. Nice small venue. I was back at the DUTH conference during the day, spending some time watching talks, some time behind the FanDuel table, and some time walking around the sponsors’ room talking to the other people behind the tables. At the close of play I took the ferry across the IJ and had dinner at the THT restaurant before going in to the gig.

I was still quite early for it, and I struck up a conversation with the woman at the merch stand. We chatted about the new Indiestadpas for 2016: a special limited edition year-long subscription ticket offered by Paradiso that gives access to at least 52 concerts (by small and upcoming bands) for a mere €25. That’s €25 for the whole year, not for each event. I had got the promotional email earlier that day, and I was excited about it. She also mentioned that Gaz Coombes would be coming out after the gig to sign things. I had my eye on the nifty Matador poster, and I said I’d be back for it later.

Before Gaz Coombes came on, we were treated to a warm-up by Piney Gir and her band (who later turned out to also be Gaz Coombes’ band, with Piney and her fellow vocalists as his backing singers). I had never heard of her before, but she put on a charming set of what I’d characterise as a fusion of indie bubblegum with alt-country Americana. A little bit of Zoey Van Goey and a little bit of Decemberists adds up to a fun set with a few infectious songs that stuck in my head even on first listen. “River Song”, with the line “I’m just a girl from Kansas City, I’ll follow you home, I’ll follow you home” kept coming back to me until I downloaded her 2011 album Geronimo! and listened to it all the way through again. I regret not getting a copy of her new album, mR hYDE’S wILD rIDE, at the gig because it doesn’t appear to be available for streaming or sale through any of my usual channels yet. So much for the assumption that I don’t need a CD player any more.

Gaz Coombes played an amazing set. A mix of fast and slow songs, all from his solo albums, nothing from the Supergrass era. This was in marked contrast to the Foo Fighters the previous evening. If a group stays together for long enough, it eventually turns into its own covers band. I love to hear the artists’ new material, though, and that’s what I was there for. Songs like “Needle’s Eye” and “Detroit” give me goosebumps almost every time I listen to them, and hearing them loud and live was mesmerizing. There could have been no better way to close the evening, and the week, than with the joyfully extended version of “Break The Silence” Gaz used to end the show.

Set list:

- Needle’s Eye

- Sub Divider

- Oscillate

- Buffalo

- One Of These Days

- The English Ruse

- White Noise

- The Girl Who Fell To Earth

- To The Wire

- Seven Walls

- Detroit

- 20/20

- Hot Fruit

Encore:

- Matador (extended)

- Break the Silence

I waited around afterwards to buy the Matador poster and get it signed. It’s going to maintain pride of place on my wall for quite a while.

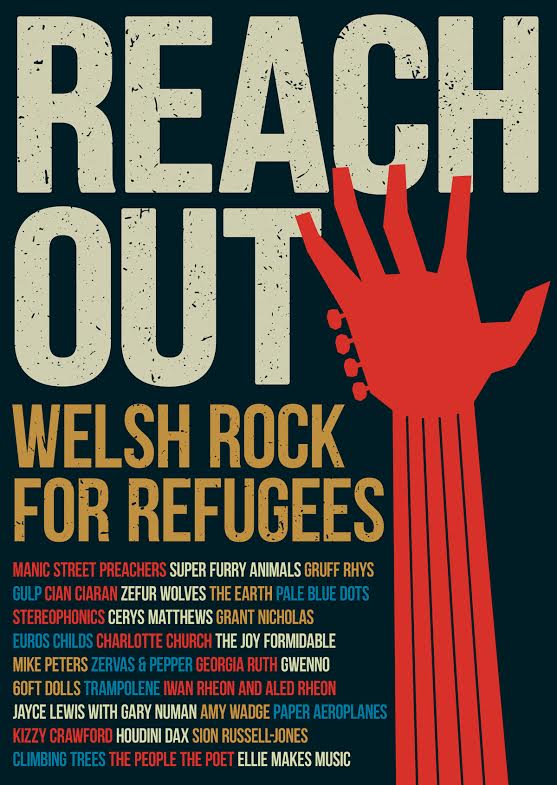

Welsh Rock for Refugees

Posters

One of the reasons I had for reducing the junk in my office and cutting the bookcases in half was to give me some wall space to hang up some concert posters.

(The Ting Tings, Dan le Sac vs. Scroobius Pip, Gaz Coombes, Neko Case, Dananananaykroyd, The Joy Formidable.)

I am inclined to go a bit mad at the merch stand after a gig, but buying T-shirts can be a bit of a hit-or-miss affair. Tour T-shirt sizing is all over the map, and quality varies greatly. With some more space on my walls, I might start picking up more posters instead.